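- What is Filebeat?

Filebeat is a member of the Beats family. It is written in Go, has no external dependencies, and is lighter than Logstash, so it is well suited to installation on production machines without adding much resource overhead. Lightweight also means simple: Filebeat's main job is to ship the logs it collects as-is.

- What does Filebeat do?

Filebeat is mainly used to forward and centralize logs and files.

- When is Filebeat useful?

Suppose we want to look at application logs such as nginx logs, PHP error logs, or MySQL logs. Usually we have to log in to the server, or, if we do not have login permission, ask the operations team to send us the logs before we can read them. With only a few servers this is manageable, but with hundreds or thousands of servers, fetching logs this way becomes tedious. With Filebeat deployed on every application machine, it collects the various logs on each server and ships them onward so they can be shown in a visualization UI such as Kibana. This is Filebeat's main purpose.

Filebeat ships with many built-in modules (auditd, Apache, nginx, system, mysql, and others) that greatly simplify collecting, parsing, and visualizing logs in common formats, often with a single command.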

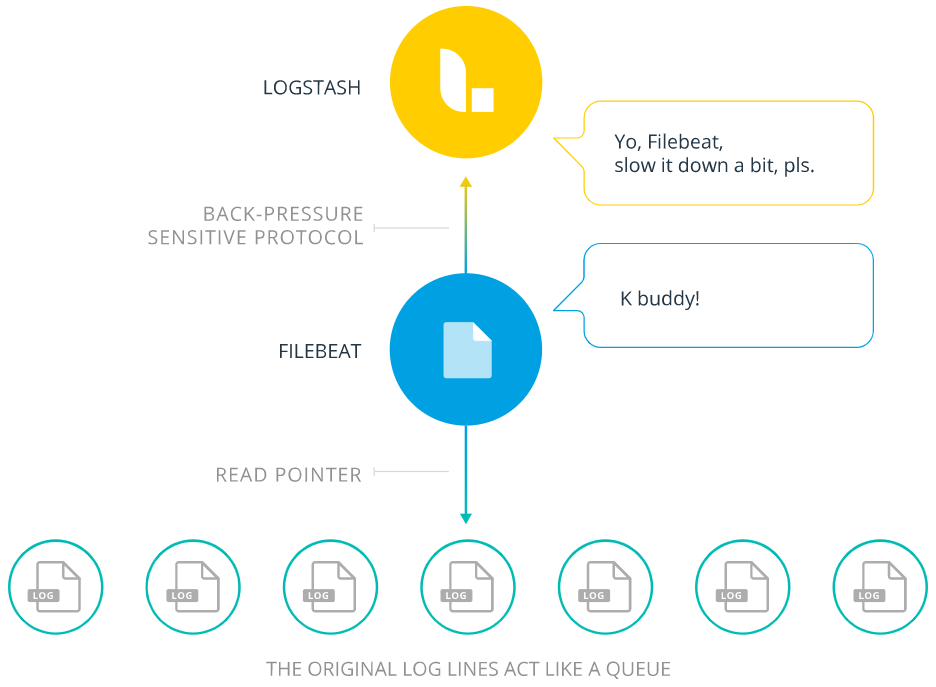

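Filebeat will not overload the pipeline. The official site describes it as follows:

When sending data to Logstash or Elasticsearch, Filebeat uses a backpressure-sensitive protocol to account for higher volumes of data. If Logstash is busy crunching data, it lets Filebeat know to slow down its read. Once the congestion is resolved, Filebeat builds back up to its original pace and keeps on shipping.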
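To make the idea of backpressure concrete, here is a minimal toy sketch. It is not Filebeat's actual wire protocol: the `BoundedPipeline` class, its `capacity`, and the `ship` helper are all hypothetical names made up for illustration. The only point is that a full buffer on the receiving side causes the sender to pause and later resume from where it stopped.

```python
import queue


class BoundedPipeline:
    """Toy stand-in for the link between a shipper and a busy receiver."""

    def __init__(self, capacity):
        self._buffer = queue.Queue(maxsize=capacity)

    def try_send(self, event):
        """Return True if accepted; False tells the sender to slow down."""
        try:
            self._buffer.put_nowait(event)
            return True
        except queue.Full:
            return False

    def drain(self, count):
        """Simulate the receiver catching up by consuming up to `count` events."""
        drained = []
        for _ in range(count):
            try:
                drained.append(self._buffer.get_nowait())
            except queue.Empty:
                break
        return drained


def ship(lines, pipeline):
    """Send lines in order; stop at the first push-back.

    Returns (sent, pending) so the caller can retry `pending` later.
    """
    sent = []
    for index, line in enumerate(lines):
        if not pipeline.try_send(line):
            return sent, lines[index:]
        sent.append(line)
    return sent, []


pipeline = BoundedPipeline(capacity=2)
sent, pending = ship(["a", "b", "c", "d"], pipeline)

# The receiver catches up, and the shipper resumes with what was left.
pipeline.drain(2)
sent_later, pending_later = ship(pending, pipeline)
```

Nothing is dropped in this sketch: lines that could not be sent simply stay pending until the receiver has room again.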

Filebeat architecture:

Here’s how Filebeat works: When you start Filebeat, it starts one or more inputs that look in the locations you’ve specified for log data. For each log that Filebeat locates, Filebeat starts a harvester (usually rendered in Chinese as 收割机). Each harvester reads a single log for new content and sends the new log data to libbeat, which aggregates the events and sends the aggregated data to the output that you’ve configured for Filebeat.

What is a harvester?

A harvester is responsible for reading the content of a single file. The harvester reads each file, line by line, and sends the content to the output. One harvester is started for each file. The harvester is responsible for opening and closing the file, which means that the file descriptor remains open while the harvester is running. If a file is removed or renamed while it’s being harvested, Filebeat continues to read the file. This has the side effect that the space on your disk is reserved until the harvester closes. By default, Filebeat keeps the file open until close_inactive is reached.
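As a rough illustration of what "reads each file, line by line" can look like, here is a small sketch. It is not Filebeat's implementation (Filebeat is written in Go); the `harvest` function is a hypothetical helper, and the choice to leave an unterminated trailing line unread is an assumption of this sketch.

```python
import os
import tempfile


def harvest(handle, offset):
    """Read complete lines from an open binary handle, starting at `offset`.

    Returns (lines, new_offset). A trailing partial line (no newline yet)
    is left unread so it can be picked up once it is complete.
    """
    handle.seek(offset)
    lines = []
    while True:
        raw = handle.readline()
        if not raw.endswith(b"\n"):
            break  # end of file, or an incomplete line
        offset += len(raw)
        lines.append(raw.rstrip(b"\r\n").decode("utf-8"))
    return lines, offset


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "a.log")
    with open(path, "ab") as writer:
        writer.write(b"hello\n")

    # The reader stays open across both reads, like a running harvester.
    with open(path, "rb") as reader:
        first, offset = harvest(reader, 0)
        with open(path, "ab") as writer:
            writer.write(b"world\npartial")
        second, offset2 = harvest(reader, offset)
```

The offset is counted in bytes, so reading the single line `hello` plus its newline moves it forward by 6.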

Deploying and running:

Download: https://www.elastic.co/cn/downloads/past-releases#filebeat

cd /opt/

tar -xvzf filebeat-6.2.4-linux-x86_64.tar.gz

[root@VM_IP_centos opt]# cd filebeat-6.2.4/

[root@VM_IP_centos filebeat-6.2.4]# ls -lh

total 48M

-rw-r--r-- 1 root root 44K Apr 13 2018 fields.yml

-rwxr-xr-x 1 root root 47M Apr 13 2018 filebeat

-rw-r----- 1 root root 51K Apr 13 2018 filebeat.reference.yml

-rw------- 1 root root 7.1K Apr 13 2018 filebeat.yml

drwxrwxr-x 4 www www 4.0K Apr 13 2018 kibana

-rw-r--r-- 1 root root 583 Apr 13 2018 LICENSE.txt

drwxr-xr-x 14 www www 4.0K Apr 13 2018 module

drwxr-xr-x 2 root root 4.0K Apr 13 2018 modules.d

-rw-r--r-- 1 root root 187K Apr 13 2018 NOTICE.txt

-rw-r--r-- 1 root root 802 Apr 13 2018 README.md

- Now let's configure Filebeat, starting by having it read data from standard input (the keyboard):

cp /opt/filebeat-6.2.4/filebeat.yml /opt/filebeat-6.2.4/test.yml

vim test.yml

filebeat.prospectors:

- type: stdin

  enabled: true

output.console:

  pretty: true

  enabled: true

type: the input type. It can be stdin or log (files), and multiple inputs can be configured. enabled controls whether the input is active.

output.console: sends events to the terminal. Its enabled setting controls whether this output is active.
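Conceptually, a stdin input paired with a console output turns every typed line into one JSON event. The sketch below imitates that in Python. It is only an approximation: `events_from_stream` is a hypothetical helper, the field names are borrowed from the console output shown in this article, and the real events carry more fields than this.

```python
import io
import json
from datetime import datetime, timezone


def events_from_stream(stream, input_type="stdin"):
    """Yield one event dict per line read from a text stream."""
    for line in stream:
        yield {
            "@timestamp": datetime.now(timezone.utc).isoformat(timespec="milliseconds"),
            "message": line.rstrip("\n"),
            "prospector": {"type": input_type},
        }


# io.StringIO stands in for the keyboard so the sketch runs unattended.
fake_stdin = io.StringIO("hello\nworld\n")
events = list(events_from_stream(fake_stdin))

for event in events:
    # indent=2 plays the role of `pretty: true` in output.console
    print(json.dumps(event, indent=2))
```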

- Start Filebeat and test it

[root@VM_IP_centos filebeat-6.2.4]# ./filebeat -e -c test.yml

2020-06-13T06:40:22.290+0800 INFO instance/beat.go:468 Home path: [/opt/filebeat-6.2.4] Config path: [/opt/filebeat-6.2.4] Data path: [/opt/filebeat-6.2.4/data] Logs path: [/opt/filebeat-6.2.4/logs]

2020-06-13T06:40:22.290+0800 INFO instance/beat.go:475 Beat UUID: d29dba4a-be63-483a-8d15-35d78f1fa68e

2020-06-13T06:40:22.290+0800 INFO instance/beat.go:213 Setup Beat: filebeat; Version: 6.2.4

2020-06-13T06:40:22.290+0800 INFO pipeline/module.go:76 Beat name: VM_16_5_centos

2020-06-13T06:40:22.290+0800 INFO instance/beat.go:301 filebeat start running.

2020-06-13T06:40:22.290+0800 INFO registrar/registrar.go:73 No registry file found under: /opt/filebeat-6.2.4/data/registry. Creating a new registry file.

2020-06-13T06:40:22.291+0800 INFO [monitoring] log/log.go:97 Starting metrics logging every 30s

2020-06-13T06:40:22.298+0800 INFO registrar/registrar.go:110 Loading registrar data from /opt/filebeat-6.2.4/data/registry

2020-06-13T06:40:22.298+0800 INFO registrar/registrar.go:121 States Loaded from registrar: 0

2020-06-13T06:40:22.298+0800 WARN beater/filebeat.go:261 Filebeat is unable to load the Ingest Node pipelines for the configured modules because the Elasticsearch output is not configured/enabled. If you have already loaded the Ingest Node pipelines or are using Logstash pipelines, you can ignore this warning.

2020-06-13T06:40:22.298+0800 INFO crawler/crawler.go:48 Loading Prospectors: 1

2020-06-13T06:40:22.298+0800 INFO crawler/crawler.go:82 Loading and starting Prospectors completed. Enabled prospectors: 1

2020-06-13T06:40:22.298+0800 INFO log/harvester.go:216 Harvester started for file: -

# "Harvester started for file" means Filebeat is up and reading its input

hello # this is a line I typed at the command line

{

"@timestamp": "2020-06-12T22:40:38.439Z",

"@metadata": {

"beat": "filebeat",

"type": "doc",

"version": "6.2.4"

},

"message": "hello",

"prospector": {

"type": "stdin"

},

"beat": {

"name": "VM_IP_centos",

"hostname": "VM_IP_centos",

"version": "6.2.4"

},

"source": "",

"offset": 6

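}

# Common Filebeat startup flags

-c, --c string Configuration file, relative to path.config (default "filebeat.yml")

--cpuprofile string Write cpu profile to file

-e, --e Log to stderr and disable syslog/file output (without it, logs go to syslog and the logs directory)

-d, --d string Enable certain debug selectors; for example, -d "publish" prints debug output for publishing

- Reading from a file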

filebeat.prospectors:

- type: log

  enabled: true

  paths:

    - /tmp/logs/*.log

output.console:

  pretty: true

  enabled: true

# Start Filebeat

[root@VM_IP_centos filebeat-6.2.4]# ./filebeat -e -c test.yml

2020-06-13T07:06:49.189+0800 INFO instance/beat.go:468 Home path: [/opt/filebeat-6.2.4] Config path: [/opt/filebeat-6.2.4] Data path: [/opt/filebeat-6.2.4/data] Logs path: [/opt/filebeat-6.2.4/logs]

2020-06-13T07:06:49.189+0800 INFO instance/beat.go:475 Beat UUID: d29dba4a-be63-483a-8d15-35d78f1fa68e

2020-06-13T07:06:49.189+0800 INFO instance/beat.go:213 Setup Beat: filebeat; Version: 6.2.4

2020-06-13T07:06:49.190+0800 INFO pipeline/module.go:76 Beat name: VM_16_5_centos

2020-06-13T07:06:49.190+0800 INFO instance/beat.go:301 filebeat start running.

2020-06-13T07:06:49.190+0800 INFO registrar/registrar.go:110 Loading registrar data from /opt/filebeat-6.2.4/data/registry

2020-06-13T07:06:49.190+0800 INFO registrar/registrar.go:121 States Loaded from registrar: 0

2020-06-13T07:06:49.190+0800 WARN beater/filebeat.go:261 Filebeat is unable to load the Ingest Node pipelines for the configured modules because the Elasticsearch output is not configured/enabled. If you have already loaded the Ingest Node pipelines or are using Logstash pipelines, you can ignore this warning.

2020-06-13T07:06:49.190+0800 INFO crawler/crawler.go:48 Loading Prospectors: 1

2020-06-13T07:06:49.190+0800 INFO log/prospector.go:111 Configured paths: [/tmp/logs/*.log]

2020-06-13T07:06:49.190+0800 INFO crawler/crawler.go:82 Loading and starting Prospectors completed. Enabled prospectors: 1

2020-06-13T07:06:49.190+0800 INFO [monitoring] log/log.go:97 Starting metrics logging every 30s

2020-06-13T07:06:49.191+0800 INFO log/harvester.go:216 Harvester started for file: /tmp/logs/a.log

# "Harvester started for file: /tmp/logs/a.log" shows that Filebeat has picked up the log file we configured

Now let's write something to a.log:

[root@VM_IP_centos logs]# echo "hello" >> a.log

Terminal output:

{

"@timestamp": "2020-06-12T23:08:14.192Z",

"@metadata": {

"beat": "filebeat",

"type": "doc",

"version": "6.2.4"

},

"message": "hello",

"prospector": {

"type": "log"

},

"beat": {

"name": "VM_IP_centos",

"hostname": "VM_IP_centos",

"version": "6.2.4"

},

"source": "/tmp/logs/a.log",

"offset": 6

}

- Custom tags in Filebeat:

filebeat.prospectors:

- type: log

  enabled: true

  paths:

    - /tmp/logs/*.log

  tags: "web"

output.console:

  pretty: true

  enabled: true

echo "test2366666" >> a.log

{

"@timestamp": "2020-06-12T23:35:31.364Z",

"@metadata": {

"beat": "filebeat",

"type": "doc",

"version": "6.2.4"

},

"source": "/tmp/logs/a.log",

"offset": 40,

"message": "test2366666",

"tags": [

"web"

], # an extra tags field appears; it can be used to label logs

"prospector": {

"type": "log"

},

"beat": {

"name": "VM_16_5_centos",

"hostname": "VM_16_5_centos",

"version": "6.2.4"

}

}

- Setting custom fields

filebeat.prospectors:

- type: log

  enabled: true

  paths:

    - /tmp/logs/*.log

  tags: "web"

  fields:

    from: "mobile" # this is the key part

output.console:

  pretty: true

  enabled: true

{

"@timestamp": "2020-06-12T23:52:12.102Z",

"@metadata": {

"beat": "filebeat",

"type": "doc",

"version": "6.2.4"

},

"offset": 54,

"message": "test777777777",

"tags": [

"web"

],

"prospector": {

"type": "log"

},

"fields": {

"from": "mobile" # the result: the custom field is nested under fields

},

"beat": {

"version": "6.2.4",

"name": "VM_16_5_centos",

"hostname": "VM_16_5_centos"

},

"source": "/tmp/logs/a.log"

}

filebeat.prospectors:

- type: log

  enabled: true

  paths:

    - /tmp/logs/*.log

  tags: "web"

  fields:

    from: "mobile"

  fields_under_root: true # place custom fields at the root of the event

output.console:

  pretty: true

  enabled: true

{

"@timestamp": "2020-06-12T23:54:04.997Z",

"@metadata": {

"beat": "filebeat",

"type": "doc",

"version": "6.2.4"

},

"message": "test888",

"source": "/tmp/logs/a.log",

"tags": [

"web"

],

"prospector": {

"type": "log"

},

"from": "mobile", # the result: the custom field now sits at the root

"beat": {

"name": "VM_16_5_centos",

"hostname": "VM_16_5_centos",

"version": "6.2.4"

},

"offset": 62

}

- Send Filebeat output to Elasticsearch. An earlier post covers how to install ES: https://www.clarkhu.net/?p=7191

filebeat.prospectors:

- type: log

  enabled: true

  paths:

    - /tmp/logs/*.log

  tags: "web"

  fields:

    from: "mobile"

  fields_under_root: true

setup.template.settings:

  index.number_of_shards: 3 # number of shards for the index

output.elasticsearch: # the key part: send output to ES

  hosts: ["es_ip:9200"]

- Start Filebeat again

[root@VM_IP_centos filebeat-6.2.4]# ./filebeat -e -c test.yml

2020-06-13T08:10:11.886+0800 INFO instance/beat.go:468 Home path: [/opt/filebeat-6.2.4] Config path: [/opt/filebeat-6.2.4] Data path: [/opt/filebeat-6.2.4/data] Logs path: [/opt/filebeat-6.2.4/logs]

2020-06-13T08:10:11.889+0800 INFO instance/beat.go:475 Beat UUID: d29dba4a-be63-483a-8d15-35d78f1fa68e

2020-06-13T08:10:11.889+0800 INFO instance/beat.go:213 Setup Beat: filebeat; Version: 6.2.4

2020-06-13T08:10:11.889+0800 INFO elasticsearch/client.go:145 Elasticsearch url: http://es_ip:9200 # shows that the Elasticsearch output has been configured

2020-06-13T08:10:11.890+0800 INFO pipeline/module.go:76 Beat name: VM_16_5_centos

2020-06-13T08:10:11.904+0800 INFO instance/beat.go:301 filebeat start running.

2020-06-13T08:10:11.904+0800 INFO registrar/registrar.go:110 Loading registrar data from /opt/filebeat-6.2.4/data/registry

2020-06-13T08:10:11.905+0800 INFO registrar/registrar.go:121 States Loaded from registrar: 1

2020-06-13T08:10:11.905+0800 INFO crawler/crawler.go:48 Loading Prospectors: 1

2020-06-13T08:10:11.905+0800 INFO [monitoring] log/log.go:97 Starting metrics logging every 30s

2020-06-13T08:10:11.911+0800 INFO log/prospector.go:111 Configured paths: [/tmp/logs/*.log]

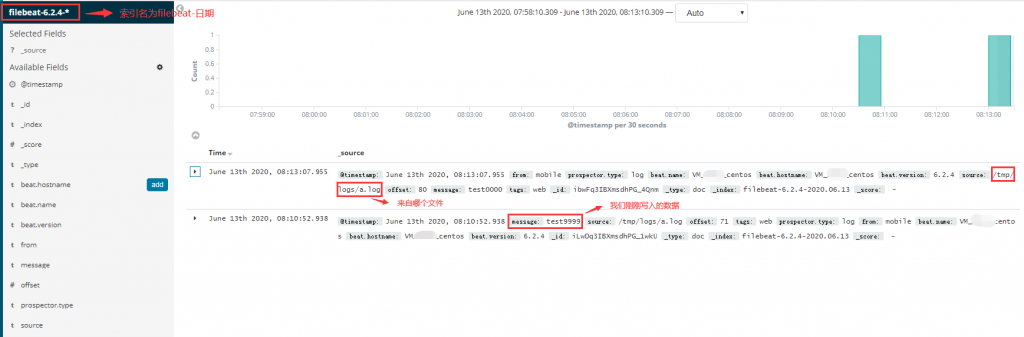

2020-06-13T08:10:11.911+0800 INFO crawler/crawler.go:82 Loading and starting Prospectors completed. Enabled prospectors:

- Let's write another line:

[root@VM_IP_centos logs]# echo "test9999" >> a.log

- We can then view it in Kibana

- Finally, let's look at how Filebeat works:

Filebeat consists of two main components: prospectors and harvesters.

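- prospector:

1. The prospector manages the harvesters and finds all the sources to read from.

2. If the input type is log, the prospector finds all files that match the configured paths and starts a harvester for each file.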

3. Filebeat supports several prospector types; this article uses two of them, log and stdin.
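The prospector's role described above can be sketched as a periodic scan: expand the configured glob patterns, then start a harvester for each file that does not have one yet. This is a simplified illustration, not Filebeat's code; `discover` and `start_harvesters` are hypothetical helpers, and the "harvester" here is just an entry in a set.

```python
import glob
import os
import tempfile


def discover(paths):
    """Expand glob patterns into a sorted, de-duplicated list of files."""
    found = set()
    for pattern in paths:
        for match in glob.glob(pattern):
            if os.path.isfile(match):
                found.add(match)
    return sorted(found)


def start_harvesters(paths, running):
    """Register newly matched files in `running`; return the files just started."""
    started = [f for f in discover(paths) if f not in running]
    running.update(started)
    return started


with tempfile.TemporaryDirectory() as tmp:
    for name in ("a.log", "b.log", "notes.txt"):
        open(os.path.join(tmp, name), "w").close()

    running = set()
    pattern = os.path.join(tmp, "*.log")  # same shape as /tmp/logs/*.log
    first_scan = [os.path.basename(f) for f in start_harvesters([pattern], running)]

    # A new log file appears; only it gets a harvester on the next scan.
    open(os.path.join(tmp, "c.log"), "w").close()
    second_scan = [os.path.basename(f) for f in start_harvesters([pattern], running)]
```

Files that do not match the pattern, such as `notes.txt` here, are never picked up.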

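- harvester: (introduced earlier in this article; see above)

1. A harvester reads the content of a single file.

2. If the file is removed or renamed while it is being read, Filebeat continues to read it.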
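The second point follows from how open file descriptors behave on POSIX systems: removing a file only removes its name, and the data stays readable through any descriptor that is still open. The short sketch below demonstrates this with plain Python. It assumes a POSIX system such as Linux or macOS (on Windows, removing an open file typically fails), and it says nothing about Filebeat's internals.

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "a.log")
    with open(path, "w") as writer:
        writer.write("line 1\nline 2\n")

    # buffering=0 makes every read go to the OS instead of a Python buffer,
    # so the second read really happens after the file name is gone.
    reader = open(path, "rb", buffering=0)
    first = reader.readline()

    os.remove(path)  # POSIX: unlinks the name; the open descriptor stays valid
    exists_after_remove = os.path.exists(path)

    second = reader.readline()
    reader.close()  # only now can the OS reclaim the disk space
```

This is also why disk space is not freed until the harvester closes the file.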

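- How Filebeat keeps file state (which line to resume reading from)

1. Filebeat keeps the state of each file and frequently flushes it to the registry file on disk.

2. The state records the last offset a harvester read, and is used to make sure all log lines are sent.

3. If the output is unreachable, Filebeat keeps track of the last lines sent and continues reading the files once the output becomes available again.

4. While Filebeat is running, state information is also kept in memory for each prospector. When Filebeat restarts, data from the registry file is used to rebuild the state, and each harvester continues from the last saved offset.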
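The state handling described above can be sketched as follows. This is a simplified model under stated assumptions, not Filebeat's implementation: `load_registry`, `save_registry`, and `read_new_lines` are hypothetical helpers, only the `source` and `offset` fields are kept, and writing to a temporary file before renaming is a design choice of this sketch.

```python
import json
import os
import tempfile


def load_registry(registry_path):
    """Return {source: offset}; a missing registry means no saved state."""
    if not os.path.exists(registry_path):
        return {}
    with open(registry_path, "r", encoding="utf-8") as handle:
        return {entry["source"]: entry["offset"] for entry in json.load(handle)}


def save_registry(registry_path, states):
    """Write to a temp file, then rename, so a crash leaves the old file intact."""
    entries = [{"source": s, "offset": o} for s, o in sorted(states.items())]
    tmp_path = registry_path + ".new"
    with open(tmp_path, "w", encoding="utf-8") as handle:
        json.dump(entries, handle)
    os.replace(tmp_path, registry_path)


def read_new_lines(log_path, states):
    """Read complete lines past the saved offset and advance the state."""
    lines = []
    with open(log_path, "rb") as handle:
        handle.seek(states.get(log_path, 0))
        for raw in handle:
            if not raw.endswith(b"\n"):
                break  # incomplete line; pick it up on a later read
            lines.append(raw.decode("utf-8").rstrip("\n"))
            states[log_path] = states.get(log_path, 0) + len(raw)
    return lines


with tempfile.TemporaryDirectory() as tmp:
    log_path = os.path.join(tmp, "a.log")
    registry_path = os.path.join(tmp, "registry")

    with open(log_path, "ab") as f:
        f.write(b"hello\n")
    states = load_registry(registry_path)  # first run: nothing saved yet
    first_run = read_new_lines(log_path, states)
    save_registry(registry_path, states)

    # Simulate a restart: in-memory state is gone, the registry is not.
    with open(log_path, "ab") as f:
        f.write(b"test9999\n")
    restored = load_registry(registry_path)
    saved_offset = restored[log_path]
    second_run = read_new_lines(log_path, restored)
    final_offset = restored[log_path]
```

After the simulated restart, only the line written since the last saved offset is read, so earlier lines are not sent twice.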

Where is the file state stored?

/opt/filebeat-6.2.4/data # Filebeat's data directory

vim registry

[{"source":"/tmp/logs/a.log","offset":80,"timestamp":"2020-06-13T08:18:13.030292935+08:00","ttl":-1,"type":"log","FileStateOS":{"inode":894391,"device":64769}}]

# offset is the byte offset reached in the file

- Using modules: skipped for now; a follow-up post will cover this.